We conducted a load test on Oracle Cloud Infrastructure to benchmark and improve the performance of kuvasz-streamer.

The target of 10,000 tps with 1 s latency was achieved after extensive tuning and re-architecting of the streamer: it now opens a fixed number of connections to the destination database and batches operations, committing once per second.
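The batching idea can be illustrated with a minimal sketch. This is not the kuvasz-streamer implementation: the `BatchCommitter` class, its `commit` callback and the injectable `clock` are hypothetical names chosen for illustration, and the fixed connection pool is left out entirely.

```python
import time
from typing import Callable, List


class BatchCommitter:
    """Buffers operations and commits them as one batch per interval.

    Hypothetical illustration only; not the streamer's actual code.
    """

    def __init__(
        self,
        commit: Callable[[List[str]], None],
        interval_s: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._commit = commit          # called with the whole batch
        self._interval_s = interval_s  # minimum time between commits
        self._clock = clock            # injectable so tests can fake time
        self._pending: List[str] = []
        self._last_commit = clock()

    def add(self, operation: str) -> None:
        """Queue one operation, then commit if the interval has elapsed."""
        self._pending.append(operation)
        self.maybe_commit()

    def maybe_commit(self) -> None:
        """Commit the pending batch if it is non-empty and the interval is over."""
        now = self._clock()
        if self._pending and now - self._last_commit >= self._interval_s:
            batch, self._pending = self._pending, []
            self._commit(batch)
            self._last_commit = now
```

In this sketch the destination sees one commit per interval regardless of how many operations arrived, which trades up to one interval of added latency for far fewer round trips. A real streamer would also need a timer that calls `maybe_commit()` when no new operations arrive.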

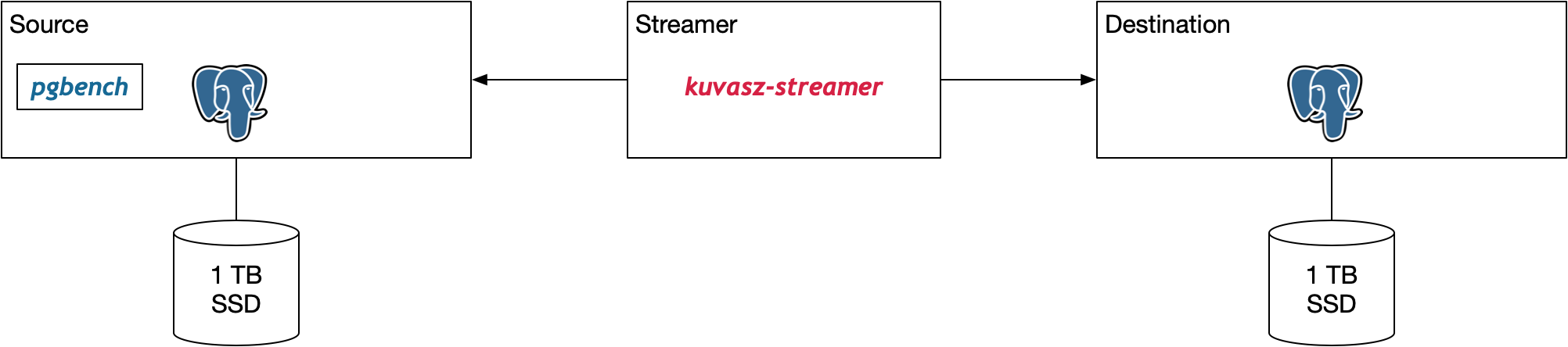

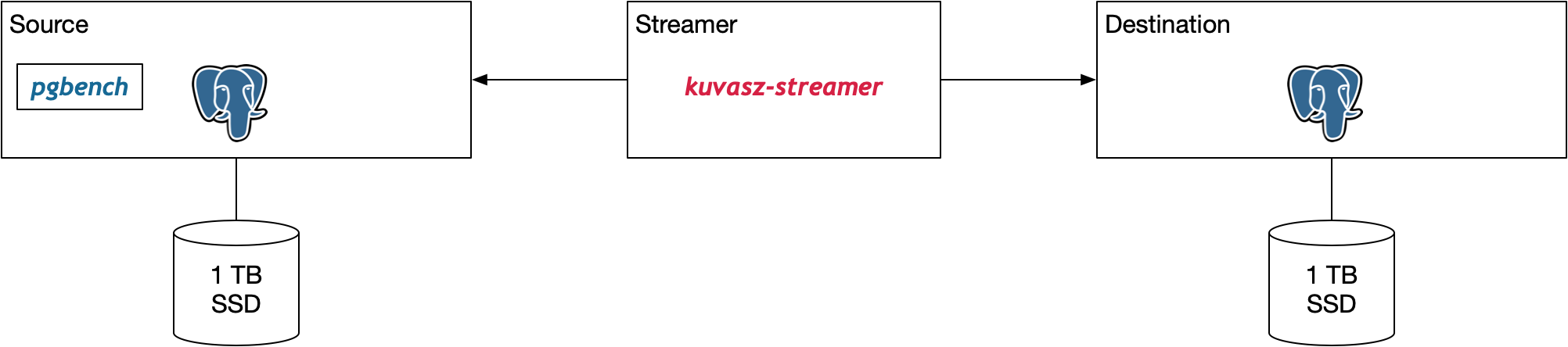

The test setup consists of a load generator and a source PostgreSQL instance on a VM, a streamer and a destination PostgreSQL instance. The source and destination databases have similar specifications.

kuvasz-streamer is an open-source change data capture (CDC) project that focuses exclusively on Postgres. It is tightly integrated with Postgres Logical Replication to provide high-performance, low-latency replication.

Check out the source code on GitHub

Scalability

Understanding system scalability is crucial for building robust applications. This post explores qualitative and quantitative approaches to system scaling. Before diving into the mathematical aspects, let’s understand the two fundamental approaches to scaling: vertical and horizontal.

Availability

This blog is part of a series on robust system design. Check the other posts that address scalability, failures, latency and integration.

Introduction

In today’s digital landscape, where businesses and users expect seamless, uninterrupted access to services, ensuring high system availability is a critical concern for IT organizations. Modern IT systems comprise numerous interdependent components that exchange information, and their collective availability directly impacts the overall user experience and business continuity.
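To make the effect of interdependent components concrete, here is a small sketch. It assumes that every component in the chain must be up for a request to succeed and that failures are independent, in which case the availabilities multiply. The function names are illustrative only.

```python
from math import prod
from typing import Iterable


def serial_availability(availabilities: Iterable[float]) -> float:
    """Availability of a chain in which every component must be up.

    Assumes independent failures, so the individual values multiply.
    """
    return prod(availabilities)


def yearly_downtime_hours(availability: float) -> float:
    """Expected downtime per 365-day year for a given availability."""
    return (1.0 - availability) * 365 * 24
```

Under these assumptions, three components at 99.9% each yield roughly 99.7% for the chain, so the chain is always less available than its weakest component.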

Handling overload

Any system has a specific maximum capacity under a certain type of workload. The underlying virtual or physical hardware limits this capacity.

Origins

The major components with hard limits are:

- Processor: if too many processes compete for CPU time, the operating system scheduler eventually has to pause some of them, delaying their work.

- Memory size: memory differs from processor and disk in that it is a hard limit. If a process cannot allocate memory, it will simply crash. Operating systems delay this by using swap: disk stands in for memory, trading speed for fewer crashes.

- Memory bandwidth: although harder to quantify and observe, memory bandwidth plays an important role in applications that manipulate large amounts of data in memory. When the memory bandwidth limit is hit, memory access is delayed.

- Storage bandwidth: any storage system has a finite bandwidth, similar to memory. Once this limit is exceeded, the operating system queues the read/write requests.

- Disk operations: in addition to raw bandwidth, a storage system has a limit on the number of read/write operations it can process. This limit is hard to quantify as it depends on the request size and mix. Again, queuing starts when this limit is reached.

- Network bandwidth: although it is rare to hit this limit, it is not impossible. Sustained transfer of large amounts of data can saturate the network interfaces and cause the process, or other processes, to stall.
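Several of the limits above show up as queuing rather than outright failure. The following sketch simulates that behaviour under simplifying assumptions: time advances in one-second steps, the resource serves a fixed number of requests per second, and nothing is ever dropped. The function name and the numbers are illustrative.

```python
from typing import List, Sequence


def backlog_over_time(arrivals_per_s: Sequence[int], capacity_per_s: int) -> List[int]:
    """Return the number of queued requests at the end of each second."""
    backlog = 0
    history: List[int] = []
    for arrivals in arrivals_per_s:
        # Work that exceeds capacity is carried over to the next second.
        backlog = max(0, backlog + arrivals - capacity_per_s)
        history.append(backlog)
    return history


# A burst above a capacity of 100 requests/s builds a queue that
# drains only once arrivals fall below capacity again.
print(backlog_over_time([80, 120, 150, 90, 50], capacity_per_s=100))
# prints [0, 20, 70, 60, 10]
```

The queue keeps delaying requests after the burst itself has ended, which is why a short overload can produce a much longer period of elevated latency.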

Handling failures

This blog is part of a series on robust system design. Check the other posts that address scalability, failures, latency and integration.

Introduction

A large system consists of an end-user application (mobile, web) calling an exposed API. Each API call translates into a number of internal and external API calls. We consider only the synchronous part of the system: the services that are directly used by the API call.

    sequenceDiagram
        participant APP as Application
        participant API as API Gateway
        participant S1 as Service 1
        participant S2 as Service 2
        participant S3 as Service 3
        APP->>API: POST /op1
        API->>S1: POST /op1
        S1->>S2: GET /info
        S2-->>S1: OK
        S1->>S3: POST /op2
        S3-->>S1: OK
        S1-->>API: OK
        API-->>APP: OK
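The synchronous chain in the diagram can be sketched as plain function calls to show how a downstream failure propagates back to the application. Everything here is a hypothetical stand-in: the function names, the `ServiceError` exception and the choice of mapping a downstream failure to a 502 response are assumptions made for illustration, not part of any real system described in the post.

```python
from typing import Callable, Dict


class ServiceError(Exception):
    """Raised when a downstream call fails (hypothetical)."""


def service_2_info() -> Dict[str, str]:
    """Stand-in for Service 2: GET /info."""
    return {"status": "OK"}


def service_3_op2(info: Dict[str, str]) -> Dict[str, str]:
    """Stand-in for Service 3: POST /op2."""
    return {"status": "OK"}


def service_1_op1(
    get_info: Callable[[], Dict[str, str]] = service_2_info,
    post_op2: Callable[[Dict[str, str]], Dict[str, str]] = service_3_op2,
) -> Dict[str, str]:
    """Service 1 calls Service 2, then Service 3, one after the other."""
    info = get_info()   # S1 ->> S2: GET /info
    post_op2(info)      # S1 ->> S3: POST /op2
    return {"status": "OK"}


def api_gateway_op1(
    handler: Callable[[], Dict[str, str]] = service_1_op1,
) -> Dict[str, object]:
    """The gateway forwards POST /op1 and turns failures into an error response."""
    try:
        return {"code": 200, "body": handler()}
    except ServiceError as exc:
        # Assumption: any downstream failure surfaces as a 502.
        return {"code": 502, "body": str(exc)}
```

Because every call is synchronous, a failure in Service 2 or Service 3 fails the whole `POST /op1` unless some layer in between handles it.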